Amazon Web Services (AWS) is one of the most comprehensive and widely used cloud platforms worldwide. In today’s age of digital transformation, cloud computing plays a significant role in helping businesses reach their goals. As demand for the cloud grows year by year and industry by industry, enterprises are turning towards cloud services, and AWS leads the cloud computing market. It’s quite evident that AWS has become a go-to platform. But because there are so many services and so many ways to do the same thing, it has become really challenging for newcomers, and even for some experienced folks, to decide which services to choose.

If you are looking to start, or have just started, with AWS and have specific requirements but don’t know which service can cater to your needs, then this is the post for you. In this post, I will explain the essential AWS core services with the help of a hypothetical example. The goal is to introduce you to the different services of AWS.

Let’s get started.

If you prefer to watch a video instead of reading a post, check out my video below on this topic.

Hypothetical example

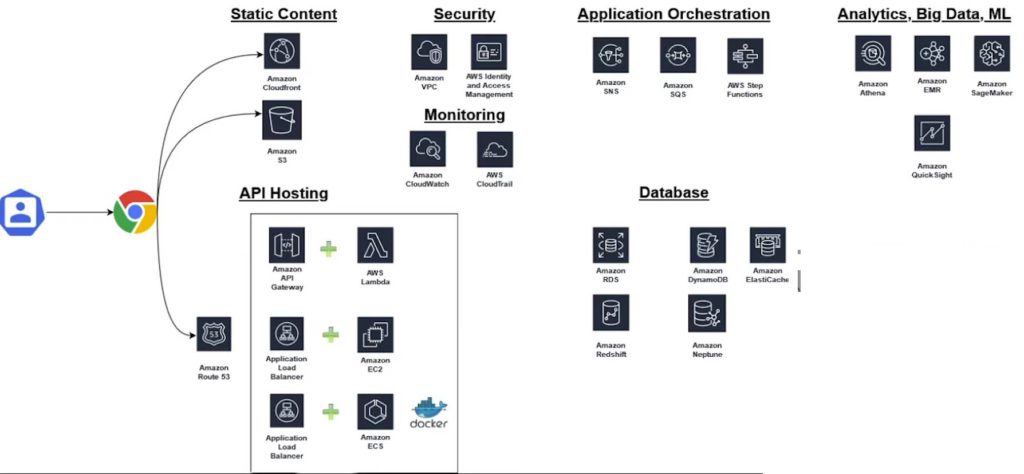

To define the core AWS services briefly, let’s consider a hypothetical example where I’m building a web application that requires APIs, a database, and analytical tools. In front of all that, I want to set up a static website that hosts a React web application.

Building the base infrastructure involves several phases: Hosting, Domain Routing, API management, Databases, Analytics, Security, Application Orchestration, Monitoring, and AI & ML capabilities. I will walk through the architecture phase by phase and introduce the relevant AWS services along the way.

Hosting

The first thing we have here is users hitting the static website from their browsers. To make the site accessible, I first need to host the static content of the web app: JavaScript files, CSS and HTML files, SVG files, images, and similar assets. For this, I need an AWS service that can host and store raw objects of varying sizes.

The best solution from AWS to cater to the above goal is Amazon S3.

Amazon S3

Amazon Simple Storage Service (Amazon S3) is one of the oldest AWS services. It is an object storage service that offers scalability, availability, and security for your content. You can store objects of different sizes in dedicated containers called S3 buckets, and easily manage, scale, and access that data globally. Common use cases include data lakes, websites, mobile applications, backup and restore, archives, enterprise applications, IoT, and big data analytics.
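To make the idea of "objects in a bucket" a bit more concrete, here is a minimal sketch that turns a list of build files into the object keys and content types they might be stored under. The `build/` folder, the file names, and the helper `plan_uploads` are assumptions for illustration only; a real upload would go through the AWS console, CLI, or an SDK.

```python
from pathlib import PurePosixPath

# Content types a browser expects for common static assets.
CONTENT_TYPES = {
    ".html": "text/html",
    ".css": "text/css",
    ".js": "text/javascript",
    ".svg": "image/svg+xml",
    ".png": "image/png",
    ".jpg": "image/jpeg",
}


def plan_uploads(local_files, build_dir="build"):
    """Map local build files to (object key, Content-Type) pairs."""
    plan = []
    for path in local_files:
        p = PurePosixPath(path)
        key = str(p.relative_to(build_dir))  # the key is the path inside the bucket
        content_type = CONTENT_TYPES.get(p.suffix.lower(), "application/octet-stream")
        plan.append((key, content_type))
    return plan


# Hypothetical output of a React build.
files = ["build/index.html", "build/static/js/main.js", "build/logo.svg"]
for key, content_type in plan_uploads(files):
    print(f"{key} -> {content_type}")
```

Setting the right content type per object matters for a static site, because the browser relies on it to decide how to treat each file.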

Now, after I successfully host and store my static content on Amazon S3, the next objective is to ensure that my users get optimal performance when they access it from anywhere in the world. To do this, I am using a caching layer service called Amazon CloudFront.

If you want to learn more about Amazon S3 in depth check out this video.

Amazon CloudFront

CloudFront is a fast and scalable content delivery network (CDN) that securely delivers your content to users across the globe with low latency and high transfer speeds. It caches content at edge locations close to your users. These edge nodes are distributed worldwide across the AWS network to speed up content delivery.

In our case, I am using CloudFront in conjunction with S3 to reduce the latency of accessing my web application.
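The caching idea can be pictured with a toy model of a single edge location. This is only a sketch of the general technique (a cache with a time-to-live in front of an origin), not how CloudFront is implemented; the class `EdgeCache`, the 60-second TTL, and the fake origin function are all made up for the example.

```python
import time


class EdgeCache:
    """Toy model of one edge location caching objects from an origin."""

    def __init__(self, fetch_from_origin, ttl_seconds=60, clock=time.monotonic):
        self.fetch_from_origin = fetch_from_origin
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._store = {}  # path -> (expires_at, body)

    def get(self, path):
        now = self.clock()
        cached = self._store.get(path)
        if cached and cached[0] > now:
            return cached[1], "hit"
        body = self.fetch_from_origin(path)  # slower trip back to the origin
        self._store[path] = (now + self.ttl_seconds, body)
        return body, "miss"


origin_requests = []


def s3_origin(path):
    """Stand-in for fetching an object from the S3 bucket."""
    origin_requests.append(path)
    return f"<contents of {path}>"


edge = EdgeCache(s3_origin, ttl_seconds=60)
print(edge.get("/index.html")[1])  # first request has to go to the origin
print(edge.get("/index.html")[1])  # repeat request is answered by the edge
print("origin requests:", len(origin_requests))
```

Only the first request pays the cost of reaching the origin; until the entry expires, later requests for the same path are answered from the edge.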

After this, the next immediate step is to manage my Domain Name System (DNS) to point my registered domain to the cloud servers and configure my API routes. To make this happen, the AWS service ‘Amazon Route 53’ comes into the picture.

Amazon Route 53

Route 53 is a highly available cloud Domain Name System (DNS) service designed to give developers and businesses a way to route users to web applications by connecting registered domain names with the IP addresses of cloud servers. It connects user requests to infrastructure running in AWS, such as S3 buckets and Amazon EC2 instances, and it can also route users to infrastructure outside of AWS.
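As a rough sketch of what "pointing a domain at AWS" looks like in practice, the snippet below builds the kind of change batch Route 53 accepts for an alias record that targets a CloudFront distribution. The domain `www.example.com` and the distribution name are placeholders, and the helper `alias_record_change` is my own; the snippet only builds the data structure and does not call AWS.

```python
import json

# Fixed hosted zone ID that AWS documents for CloudFront alias targets.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def alias_record_change(domain, cloudfront_domain):
    """Build a change batch that points `domain` at a CloudFront distribution."""
    return {
        "Comment": f"Point {domain} at CloudFront",
        "Changes": [
            {
                "Action": "UPSERT",  # create the record, or update it if it exists
                "ResourceRecordSet": {
                    "Name": domain,
                    "Type": "A",
                    "AliasTarget": {
                        "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
                        "DNSName": cloudfront_domain,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ],
    }


# Both names below are placeholders, not real resources.
batch = alias_record_change("www.example.com", "d111111abcdef8.cloudfront.net")
print(json.dumps(batch, indent=2))
```

With an SDK, a structure like this would typically be passed along with the ID of your own hosted zone when changing record sets.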

So far, everything is smooth and steady. From here, I need to host some APIs that our website can call for various use cases.

Thankfully, there are multiple options available on AWS to accomplish the same thing in different ways, depending on my objectives. I am listing a few options below.

API Hosting

Amazon API Gateway

It is a fully managed service to create, publish, maintain, monitor, and secure APIs. Using it, one can create RESTful APIs or use WebSocket APIs to enable real-time two-way communication. API Gateway handles all the tasks involved in accepting and processing up to hundreds of thousands of concurrent API calls to access data, business logic, or functionality from your back-end services.

You may enjoy my article on Web Hosting Options on AWS.

AWS Lambda

Lambda is a serverless compute environment on AWS that lets users run their code without provisioning or managing machines to handle workloads. AWS Lambda scales up or down by provisioning capacity on the user’s behalf, spinning up your application ‘just in time’ to serve incoming traffic.

To create a Lambda function, I simply build my code and upload it to the AWS Lambda service after creating a function. By combining this with API Gateway, I can define my own REST endpoints and forward requests from API Gateway to the Lambda functions to create a REST API.

To know more about AWS Lambda, please watch this video.
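Here is a minimal sketch of what such a function could look like in Python, assuming API Gateway forwards the request using its proxy integration (the event carries the query string, and the function returns a status code, headers, and a string body). The `/hello` route and the `name` parameter are invented for the example.

```python
import json


def lambda_handler(event, context):
    """Handle a request forwarded by API Gateway (proxy integration)."""
    # queryStringParameters can be None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local smoke test with a hand-written event; no AWS account needed.
fake_event = {
    "httpMethod": "GET",
    "path": "/hello",
    "queryStringParameters": {"name": "AWS"},
}
print(lambda_handler(fake_event, None))
```

Because the handler is a plain function, it can be exercised locally like this before it is ever uploaded.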

Application Load Balancer

Application Load Balancers (ALBs) distribute incoming traffic across multiple targets, such as EC2 instances, containers, and IP addresses, in one or more Availability Zones. An ALB is elastic in nature: it scales itself as incoming traffic changes and, paired with an Auto Scaling group, lets machines be added or removed as traffic spikes. This increases the availability of your web application.
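To picture what "distributing traffic across targets" means, here is a toy round-robin sketch that skips unhealthy targets. Round robin is one of the routing algorithms a load balancer can use; the instance names and the `round_robin` helper are hypothetical, and a real load balancer does far more (health checks, connection handling, TLS, and so on).

```python
from itertools import cycle


def round_robin(targets, healthy, request_count):
    """Assign requests to healthy targets in rotation."""
    pool = [t for t in targets if t in healthy]
    if not pool:
        raise RuntimeError("no healthy targets available")
    rotation = cycle(pool)
    return [next(rotation) for _ in range(request_count)]


targets = ["ec2-a", "ec2-b", "ec2-c"]  # hypothetical instance names
healthy = {"ec2-a", "ec2-c"}           # ec2-b is failing its health check
print(round_robin(targets, healthy, 4))
```

The unhealthy instance receives no traffic, which is the availability benefit in miniature.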

Amazon EC2

EC2, short for Elastic Compute Cloud, is one of the oldest services offered by AWS. Basically, one can rent virtual machines from AWS and spin them up or down with a chosen set of resources at any time. It provides instance types to fit many kinds of workloads. Amazon EC2 falls under infrastructure as a service (IaaS), so I can configure the CPU, memory, storage, and networking capacity of my instances.

By using Amazon EC2, I can run virtual machines that host the code behind my APIs, and by combining it with an Application Load Balancer, I can distribute all my incoming traffic across the available instances in my cluster to keep my web application available under all kinds of traffic loads.

As Docker is very popular right now, let’s also look at hosting your Docker containers with ECS in combination with the Application Load Balancer. I already covered the Application Load Balancer, so let’s jump right into Amazon ECS.

Amazon ECS

Amazon Elastic Container Service (ECS) is a container orchestration service that helps deploy, manage, and monitor containerized applications. It can run on AWS Fargate, a serverless compute engine, which frees users from managing the underlying servers and nodes. Amazon ECS also enables businesses to build applications rapidly.

With ECS, I build my Docker images and upload them to Amazon Elastic Container Registry (ECR) to store them. I can then ask ECS to manage my cluster, something like ‘hey! I always want you to make sure five copies of my container are up’, and ECS will make sure they are up for me. It also periodically checks the health of my containers and can replace the ones that fail.

I think so far, everything makes sense from the compute perspective, but now we want to add our database layer. Again, we have to make a key decision between two different options, SQL and NoSQL, so let’s briefly touch on both of them.
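The "always keep five up" request boils down to comparing a desired count with what is actually running. The sketch below shows that comparison as a single reconciliation step; it is a simplified illustration of the idea, not the ECS scheduler, and the task IDs and the `reconcile` function are made up.

```python
def reconcile(desired_count, tasks):
    """Decide what to do so that `desired_count` healthy tasks are running.

    `tasks` maps a task id to True (healthy) or False (unhealthy).
    """
    unhealthy = [task_id for task_id, ok in tasks.items() if not ok]
    healthy_count = len(tasks) - len(unhealthy)
    return {
        "stop": unhealthy,                               # tasks to replace
        "start": max(desired_count - healthy_count, 0),  # how many to launch
    }


running = {"task-1": True, "task-2": True, "task-3": False, "task-4": True}
print(reconcile(5, running))
```

With three healthy tasks and a desired count of five, the step asks for the failed task to be stopped and two new ones to be started.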

If you’re still struggling to understand the differences between EC2, ECS, and Lambda, check out my video below comparing the three technologies.

Databases

SQL

SQL-based databases are typically selected when the application requires heavily relational queries. The data model is represented as a database schema with strictly typed columns. SQL databases are still enormously popular, and AWS has dedicated services to help you build and manage your SQL instances.
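For a feel of what "relational style queries" over a strict schema look like, here is a small sketch using Python's built-in `sqlite3` module as a local stand-in for a managed SQL database. The `customers` and `orders` tables and their contents are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory stand-in for a managed SQL database
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total_cents INTEGER NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO orders VALUES (1, 1, 2500), (2, 1, 1000), (3, 2, 4000);
    """
)


def totals_by_customer(connection):
    """Join the two tables and sum each customer's orders."""
    rows = connection.execute(
        """
        SELECT c.name, SUM(o.total_cents)
        FROM customers AS c
        JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    )
    return rows.fetchall()


print(totals_by_customer(conn))
```

The join across two tables is exactly the kind of access pattern that pushes a project towards SQL.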

Amazon RDS

Amazon Relational Database Service (RDS) is used to set up, operate, and scale relational databases in the cloud. It automates administration tasks such as hardware provisioning, database setup, patching, and backups so that you can focus on your application.

I can host SQL databases such as Microsoft SQL Server, PostgreSQL, and Oracle Database, along with other standard SQL engines.

Amazon Aurora

It is a fully managed relational database service that is compatible with MySQL and PostgreSQL. It is close to a one-click solution where everything is managed for me: it regularly runs health checks to monitor the cluster and takes backups for me.

Aurora also provides better performance than typical MySQL and Postgres databases, at just a minor cost increase. A whole bunch of benefits come with using Aurora that reduce headaches when compared with a normal database instance that you manage.

Take it from me, if you’re going relational, go with Aurora.

Amazon Redshift

It is a fully managed, petabyte-scale data warehouse service in the cloud that delivers business intelligence insights for your business and customers. Amazon Redshift is also a SQL database, and it can query datasets ranging from gigabytes to petabytes in size. It handles heavy-duty BI and analytical queries involving operations like joins or time series analysis.

NoSQL

NoSQL stands for “Not Only SQL.” It is a database management approach that supports a wide variety of data models, including key-value, document, columnar, and graph. A NoSQL database is generally a non-relational database that is distributed, flexible, and able to scale horizontally.

NoSQL is the opposite of SQL – it emphasizes performance at scale over supporting relational access patterns.

There are a few popular NoSQL databases available on AWS, such as Amazon DynamoDB, Amazon ElastiCache, and Amazon Neptune. However, DynamoDB is by far the most popular.

You may enjoy this article on deciding between SQL vs NoSQL for a New Project

Amazon DynamoDB

It is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability. One can easily offload the administrative functions of operating and scaling a distributed database using DynamoDB; since it is fully managed, there is no need to worry about hardware provisioning, setup, configuration, replication, software patching, or cluster scaling.

Nowadays, many businesses choose DynamoDB for their NoSQL workloads due to its ease of use and wide range of applications. Virtually unlimited scalability is another attractive feature of DynamoDB, especially for companies operating at very high scale.
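One thing that surprises newcomers is that DynamoDB's low-level API stores each attribute together with a type tag, for example `{"S": "hello"}` for a string or `{"N": "42"}` for a number. The sketch below converts a plain Python dictionary into that shape for three common types. The `to_item` helper and the sample order are my own illustration, and higher-level SDK interfaces can usually do this conversion for you.

```python
def to_attribute_value(value):
    """Convert a Python value to DynamoDB's typed attribute-value format."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return {"BOOL": value}
    if isinstance(value, (int, float)):
        return {"N": str(value)}  # numbers are sent as strings
    if isinstance(value, str):
        return {"S": value}
    raise TypeError(f"unsupported type: {type(value).__name__}")


def to_item(record):
    """Convert every attribute of a record."""
    return {name: to_attribute_value(value) for name, value in record.items()}


# Hypothetical order record; customer_id could serve as the partition key.
order = {"customer_id": "c-42", "order_ts": 1700000000, "paid": True}
print(to_item(order))
```

Notice there is no schema beyond the key attributes: each item simply carries whatever attributes it has.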

To know more about Amazon DynamoDB please watch this video

Amazon ElastiCache

It is a NoSQL-based managed service that makes it easy to set up, manage, and scale a distributed in-memory data store or cache environment in the cloud. Amazon ElastiCache works with both the Redis and Memcached engines to perform key-value lookups.

Amazon Neptune

Amazon Neptune is a graph database service offered by AWS to build and run applications that work with highly connected datasets. Using it, one can build applications such as knowledge graphs, identity graphs, fraud detection, recommendation engines, and social networking, or anything else that needs to create relationships between data and query those relationships quickly. It uses graph structures such as nodes, edges, and properties to store and operate on data.

The next phase of our hypothetical ecosystem is Application Orchestration.

Application Orchestration

Application Orchestration is a broad term that refers to building applications that consist of small components that perform some larger operation as a whole.

As an example of application orchestration, think about a credit card application that needs to process multiple workflow steps: authorizing the transaction, performing the transaction, and confirming the transaction was successful.

Amazon Web Services offers two main services for application orchestration, namely Amazon SNS and Amazon SQS.

Amazon SNS and SQS

Amazon Simple Queue Service (SQS) and Amazon Simple Notification Service (SNS) are both messaging services within AWS that communicate asynchronously to maintain coordination between different application services.

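When thinking of SNS and SQS, think PubSub. SNS is the Pub, and SQS is the Sub: SNS pushes each published message out to all of its subscribers, while an SQS queue holds messages until a consumer is ready to process them.

To learn more about Amazon SNS vs SQS, please watch this video.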
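The PubSub relationship can be sketched in a few lines of plain Python. Here a small `Topic` class plays the role of SNS and two `deque` objects play the role of SQS queues; everything in this snippet is a toy stand-in for the pattern, not the AWS services themselves.

```python
from collections import deque


class Topic:
    """Toy stand-in for an SNS topic: pushes each message to every subscriber."""

    def __init__(self):
        self.subscribers = []

    def subscribe(self, queue):
        self.subscribers.append(queue)

    def publish(self, message):
        for queue in self.subscribers:
            queue.append(dict(message))  # each queue receives its own copy


# Two deques stand in for SQS queues owned by different services.
billing_queue, shipping_queue = deque(), deque()
orders = Topic()
orders.subscribe(billing_queue)
orders.subscribe(shipping_queue)
orders.publish({"order_id": 1, "status": "placed"})

# Each consumer pulls from its own queue at its own pace.
print("billing got:", billing_queue.popleft())
print("shipping got:", shipping_queue.popleft())
```

The publisher never needs to know who is listening, and a slow consumer only delays its own queue. That decoupling is the main reason to pair the two.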

AWS Step Functions

AWS Step Functions is a low-code, serverless visual workflow service used to orchestrate AWS services. It lets us combine AWS Lambda functions and other AWS services to build business-critical applications and business processes in a sequential manner, and one can define workflows in Step Functions’ graphical console as a series of event-driven steps.

For example, suppose my web application is an e-commerce store. Using AWS Step Functions, I can define my workflow in event-driven steps such as placing an order, validating the credit card used for payment, and then processing and finalizing the shipment.
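Behind the graphical console, a workflow like this is described in a JSON format called Amazon States Language. Below is a minimal sketch of the order workflow as a Python dictionary in that format, plus a tiny helper that walks the steps in order. The state names, the Lambda ARNs, and the `execution_order` helper are assumptions for illustration, and the helper only understands simple linear workflows.

```python
import json

# Hypothetical Lambda ARN prefix; the account ID and function names are placeholders.
ARN = "arn:aws:lambda:us-east-1:123456789012:function:"

order_workflow = {
    "Comment": "Order processing sketch",
    "StartAt": "PlaceOrder",
    "States": {
        "PlaceOrder": {"Type": "Task", "Resource": ARN + "place-order", "Next": "ValidatePayment"},
        "ValidatePayment": {"Type": "Task", "Resource": ARN + "validate-payment", "Next": "ShipOrder"},
        "ShipOrder": {"Type": "Task", "Resource": ARN + "ship-order", "End": True},
    },
}


def execution_order(definition):
    """Follow the Next pointers from StartAt until a state marked End."""
    order, name = [], definition["StartAt"]
    while True:
        order.append(name)
        state = definition["States"][name]
        if state.get("End"):
            return order
        name = state["Next"]


print(execution_order(order_workflow))
print(json.dumps(order_workflow, indent=2))
```

Each step stays a small, independent function, while the definition owns the order in which they run.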

Analytics, Big Data & ML

Amazon Athena

It is a serverless interactive query service used to analyze data in Amazon S3 using standard SQL. Amazon Athena is widely used nowadays since users can keep data in Amazon’s cost-effective S3 storage and use Athena to look it up.

This is not going to be optimal in terms of performance. Still, it is excellent if I am looking to save a few bucks. The best alternative for this goal is Amazon Redshift, a more persistent, reliable analytical tool for performing analysis using SQL on large sets of data.

To learn more about Amazon Athena in depth, please watch this video.

Amazon QuickSight

It is the business intelligence tool in the AWS family of services. It provides data analytics and business intelligence insights similar to those of Power BI or Tableau, and it allows users to explore data and extract insights without the need for coding.

Amazon QuickSight can be integrated with Amazon Redshift, Amazon Athena, local Excel files, and more to create and publish interactive BI dashboards that include machine learning-powered insights.

Amazon Elastic MapReduce (EMR)

It is a managed cluster platform used to run big data frameworks for processing vast amounts of data using open source tools such as Apache Spark, Apache Hive, Apache HBase, Apache Flink, Apache Hudi, and Presto. Amazon EMR makes it easy to set up, operate, and scale your big data environments, even up to petabytes of data.

Amazon SageMaker

It is a fully managed machine learning service used to prepare, build, train, and deploy high-quality machine learning models. It is mainly used by data scientists and developers.

I often see that security gets little attention from many folks, especially people who are just getting started. It is essential to secure your cloud environment because vulnerabilities can be exploited to breach your security and access your AWS account.

So you always need to make sure your application is secure both in terms of your credentials and vulnerabilities that exist on your system, for example, open ports.

Security

Amazon VPC

Amazon VPC stands for Virtual Private Cloud. It enables us to launch AWS resources into a virtual network where we can define when and how different applications can access and retrieve data, or make outbound calls to the public internet and to other groups within the firewall.

With Amazon VPC, I can build a digital firewall around my AWS ecosystem, and this digital firewall ensures that my resources are separate from other people’s resources.

When I sign up for an AWS account for the first time, I get a default VPC that is very liberal in terms of its settings. Still, as I am serious about the security of my AWS environment, I will make sure that it is locked down and that nothing gets in or out beyond my defined rules. Moreover, I also need to manage networking components like subnets, route tables, and internet gateways to make it more secure.

AWS Identity and Access Management (IAM)
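Planning subnets is mostly IP address arithmetic, which Python's standard `ipaddress` module handles well. The sketch below carves an assumed `10.0.0.0/16` VPC range into `/24` subnets and picks a few for public and private use. The CIDR block and the subnet names are arbitrary choices for the example.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")   # assumed VPC CIDR block
subnets = list(vpc.subnets(new_prefix=24))  # carve the VPC into /24 subnets

# Hypothetical layout: two public and two private subnets.
layout = {
    "public-a": subnets[0],
    "public-b": subnets[1],
    "private-a": subnets[10],
    "private-b": subnets[11],
}

for name, net in layout.items():
    print(f"{name}: {net} ({net.num_addresses} addresses)")
```

Keeping public and private ranges visibly separate like this makes the later route table and firewall rules easier to reason about.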

IAM enables us to manage access to AWS services and resources securely by creating and managing AWS users and groups and defining specific roles and permissions. For applications running on AWS, you can use IAM to manage access controls for your employees, applications, and devices. With this, you can allow or deny permissions to access your cloud resources within your organization or even outside it.

In the above example, I can define rules such as ‘this Lambda function has access to DynamoDB’ or ‘this Lambda function can call these APIs on a specific DynamoDB table’. I can also set up role-based access to my cloud resources, such as an admin role, an editor role, or a viewer role.

To know more about AWS Identity and Access Management in depth, please watch this video or read this article.
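A rule like "this function may use these APIs on this table" is written as a JSON policy document. Here is a minimal sketch of one, built as a Python dictionary. The account ID, region, and `Orders` table name are placeholders, and the `is_allowed` helper is a deliberately naive check I wrote for illustration; real IAM evaluation also handles wildcards, explicit denies, conditions, and more.

```python
import json

# Account ID, region, and table name are placeholders.
TABLE_ARN = "arn:aws:dynamodb:us-east-1:123456789012:table/Orders"

lambda_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadWriteOrdersTable",
            "Effect": "Allow",
            "Action": ["dynamodb:GetItem", "dynamodb:PutItem", "dynamodb:Query"],
            "Resource": TABLE_ARN,
        }
    ],
}


def is_allowed(policy, action, resource):
    """Very rough check: exact-match actions and resources only, no wildcards."""
    for statement in policy["Statement"]:
        if (
            statement["Effect"] == "Allow"
            and action in statement["Action"]
            and resource == statement["Resource"]
        ):
            return True
    return False  # anything not explicitly allowed is denied


print(json.dumps(lambda_policy, indent=2))
print(is_allowed(lambda_policy, "dynamodb:GetItem", TABLE_ARN))
print(is_allowed(lambda_policy, "dynamodb:DeleteItem", TABLE_ARN))
```

Listing only the actions the function actually needs, on only the table it needs, is the habit worth building from day one.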

Amazon Cognito

Amazon Cognito is a massive and powerful service that provides user sign up and login functionality for your application. If you’re building an application and you want to give users the ability to register and sign in in order to interact with it, then Amazon Cognito is the service for you.

Cognito has two important features, User Pools and Identity Pools/Federated Identities.

User Pools provide user sign up, login, forgotten password, and other familiar user account flows. They also provide a hosted UI that you can redirect users to in order to sign in to your application’s user pool. You as an administrator can also link your User Pool to social sign in providers (like Google, Facebook, Apple, etc.) to offer a more convenient way to register and log in.

Identity Pools, on the other hand, are a way for you to grant temporary access credentials to users so that they can access a limited set of AWS services. Say, for example, you want a user to be able to upload a file directly to Amazon S3; you can use an Identity Pool to link the user with an IAM role that grants this permission.

This is just scratching the surface of what Amazon Cognito is capable of, but if you want to learn more you can check out my Cognito Complete Beginner Guide article here.

Finally, before I conclude, I want to talk about one last significant phase, ‘Monitoring’, because monitoring is vital for making sure my service stays up, stays reliable, and stays healthy at all times. So let me walk you through it.

Monitoring

Amazon CloudWatch

Amazon CloudWatch is a monitoring and observability service built to monitor the status of applications, respond to performance changes, optimize resource utilization, and get a unified view of operational health. CloudWatch collects data in the form of logs, metrics, and events, providing you with a unified view of AWS resources, applications, and services that run on both AWS and on-premises servers.

CloudWatch allows us to do things like set up graphs and alarms on metrics that determine the health of our service. It also allows us to look at logs and create log groups that group log files together, so we can view them in a much cleaner and more cohesive way and ensure that our service is always running predictably.

To know more about Amazon CloudWatch in depth, please watch this video.

AWS CloudTrail

It enables governance, compliance, operational auditing, and risk auditing of your AWS account. This is very useful for seeing who is doing what in your application, especially in an ecosystem with a whole bunch of AWS accounts and many users. It is kind of an audit table or audit service that logs and tracks who is accessing what and when in your AWS ecosystem.
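An alarm on a metric is, at its core, a threshold check over the most recent datapoints. The sketch below shows that idea with made-up CPU readings. The function `alarm_state` and its simple "all of the last N periods breach the threshold" rule are a simplification for illustration; the state names `OK`, `ALARM`, and `INSUFFICIENT_DATA` are the ones CloudWatch alarms use.

```python
def alarm_state(datapoints, threshold, evaluation_periods):
    """Return "ALARM" if the latest N datapoints all exceed the threshold."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    return "ALARM" if all(value > threshold for value in recent) else "OK"


cpu_percent = [41.0, 55.5, 83.2, 91.7, 88.4]  # made-up samples, one per period
print(alarm_state(cpu_percent, threshold=80.0, evaluation_periods=3))
```

Requiring several consecutive breaches, rather than a single one, is a common way to avoid being paged for a short spike.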
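To show the "who did what and when" idea, here is a small sketch that groups a handful of audit records by user. The records are invented and heavily trimmed; they borrow a few field names that CloudTrail events carry (`eventTime`, `eventSource`, `eventName`, `userIdentity`), and the `actions_by_user` helper is my own.

```python
from collections import defaultdict

# Made-up, heavily trimmed records; real events carry many more fields.
events = [
    {"eventTime": "2024-01-05T10:00:00Z", "eventSource": "s3.amazonaws.com",
     "eventName": "PutObject", "userIdentity": {"userName": "alice"}},
    {"eventTime": "2024-01-05T10:02:00Z", "eventSource": "ec2.amazonaws.com",
     "eventName": "TerminateInstances", "userIdentity": {"userName": "bob"}},
    {"eventTime": "2024-01-05T10:05:00Z", "eventSource": "s3.amazonaws.com",
     "eventName": "DeleteBucket", "userIdentity": {"userName": "alice"}},
]


def actions_by_user(records):
    """Group (time, action) pairs by the user who triggered them."""
    summary = defaultdict(list)
    for record in records:
        user = record.get("userIdentity", {}).get("userName", "unknown")
        summary[user].append((record["eventTime"], record["eventName"]))
    return dict(summary)


for user, actions in actions_by_user(events).items():
    print(user, actions)
```

A summary like this is often the first thing you want when investigating an unexpected change in an account.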

Conclusion

Hopefully, I have covered almost all the essential core services that come in handy in various use cases. I also hope that the hypothetical example above helped you understand the baseline architecture of a web application and the AWS services that can cater to your needs according to your business goals and scenarios.

If this blog helped you understand the critical AWS core services and their use cases, then please feel free to subscribe to my newsletter, and I will see you soon with another exciting blog post. Until then, keep learning more about AWS from my previous blog posts :).
